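Why doesn't Tomcat's thread pool shrink?

Symptom

Monitoring shows very few busy Tomcat threads and very low pool utilization, yet the pool still holds a large number of threads.

Does Tomcat's thread pool have no shrink mechanism?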

    [arthas@22]$ mbean | grep -i thread

Key values:

- currentThreadsBusy 2

- currentThreadCount 916

- maxThreads 2000

- minSpareThreads 25

Only 2 threads are doing work, yet the pool holds 916 threads. Why?

Repeated observations show the same picture.
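The same four attributes can also be read programmatically over JMX. The following is a minimal sketch, assuming it runs inside the Tomcat JVM (for example from a servlet or an agent) and that the MBeans are registered under the `Catalina` domain as in the arthas query above; the class name `ThreadPoolMBeanReader` is hypothetical.

```java
import java.lang.management.ManagementFactory;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.management.JMException;
import javax.management.MBeanServer;
import javax.management.ObjectName;

public class ThreadPoolMBeanReader {

    // Attribute names taken from the mbean output discussed above.
    private static final String[] ATTRIBUTES = {
        "currentThreadsBusy", "currentThreadCount", "maxThreads", "minSpareThreads"
    };

    /** Reads the attributes from every MBean in this JVM that matches the pattern. */
    public static Map<String, Map<String, Object>> read(String pattern) {
        MBeanServer server = ManagementFactory.getPlatformMBeanServer();
        Map<String, Map<String, Object>> result = new LinkedHashMap<>();
        try {
            for (ObjectName name : server.queryNames(new ObjectName(pattern), null)) {
                Map<String, Object> values = new LinkedHashMap<>();
                for (String attribute : ATTRIBUTES) {
                    values.put(attribute, server.getAttribute(name, attribute));
                }
                result.put(name.toString(), values);
            }
        } catch (JMException e) {
            throw new IllegalStateException("Failed to read " + pattern, e);
        }
        return result;
    }

    public static void main(String[] args) {
        // Outside a Tomcat JVM no MBean matches, so this prints an empty map.
        System.out.println(read("Catalina:type=ThreadPool,name=*"));
    }
}
```

Embedded deployments may register the pool under a different JMX domain, so the pattern is an assumption that may need adjusting.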

Cause

Where the MBean data comes from

First, work out where the MBean data comes from.

    // org.apache.tomcat.util.net.AbstractEndpoint#init

currentThreadsBusy: the number of threads currently running a task

    // org.apache.tomcat.util.net.AbstractEndpoint#getCurrentThreadsBusy
    public int getCurrentThreadsBusy() {
        Executor executor = this.executor;
        if (executor != null) {
            if (executor instanceof ThreadPoolExecutor) {
                // threads that are actively executing tasks
                return ((ThreadPoolExecutor) executor).getActiveCount();
            } else if (executor instanceof ResizableExecutor) {
                return ((ResizableExecutor) executor).getActiveCount();
            } else {
                return -1; // unknown executor type
            }
        } else {
            return -2; // no executor configured
        }
    }

currentThreadCount: the number of threads currently in the pool

    // org.apache.tomcat.util.net.AbstractEndpoint#getCurrentThreadCount
    public int getCurrentThreadCount() {
        Executor executor = this.executor;
        if (executor != null) {
            if (executor instanceof ThreadPoolExecutor) {
                // all threads in the pool, busy or idle
                return ((ThreadPoolExecutor) executor).getPoolSize();
            } else if (executor instanceof ResizableExecutor) {
                return ((ResizableExecutor) executor).getPoolSize();
            } else {
                return -1; // unknown executor type
            }
        } else {
            return -2; // no executor configured
        }
    }

maxThreads: the maximum number of threads

    // org.apache.tomcat.util.net.AbstractEndpoint#getMaxThreads
    public int getMaxThreads() {
        if (internalExecutor) {
            return maxThreads;
        } else {
            return -1; // an external executor is in use
        }
    }

minSpareThreads: the core thread count

    // org.apache.tomcat.util.net.AbstractEndpoint#getMinSpareThreads
    public int getMinSpareThreads() {
        return Math.min(getMinSpareThreadsInternal(), getMaxThreads());
    }

    private int getMinSpareThreadsInternal() {
        if (internalExecutor) {
            return minSpareThreads;
        } else {
            return -1; // an external executor is in use
        }
    }

Initialization of the default thread pool:

    // org.apache.tomcat.util.net.AbstractEndpoint#createExecutor

The pool initialization shows that minSpareThreads is simply corePoolSize. There is also a hard-coded keepAliveTime of 60s, and the task queue is unbounded.

The thread pool's keepAliveTime

Start with the JDK Javadoc:

@param keepAliveTime when the number of threads is greater than

the core, this is the maximum time that excess idle threads

will wait for new tasks before terminating.

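In short, a thread beyond the core count is terminated if it waits for keepAliveTime without receiving a task.

A look at the implementation:

    // java.util.concurrent.ThreadPoolExecutor#runWorker

Judging by the source, there is nothing wrong with keepAliveTime itself.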
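The documented behaviour is easy to observe with a plain JDK pool. The sketch below is not Tomcat code: it uses a `SynchronousQueue` (an assumption made only so that a burst of tasks forces the pool up to its maximum), holds the tasks on a latch, and then lets the pool idle for longer than keepAliveTime. The class name `KeepAliveShrinkDemo` and the parameter values are illustrative.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class KeepAliveShrinkDemo {

    /** Returns {pool size at the peak, pool size after idling past keepAliveTime}. */
    public static int[] run(int core, int max, long keepAliveMillis) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                core, max, keepAliveMillis, TimeUnit.MILLISECONDS, new SynchronousQueue<>());
        CountDownLatch release = new CountDownLatch(1);
        try {
            // A SynchronousQueue has no capacity, so every task that finds no
            // idle worker forces a new thread, up to max.
            for (int i = 0; i < max; i++) {
                pool.execute(() -> {
                    try {
                        release.await(); // keep the worker busy until released
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
            int peak = pool.getPoolSize();
            release.countDown();                // all tasks finish, workers go idle
            Thread.sleep(keepAliveMillis * 5);  // idle well past keepAliveTime
            return new int[] {peak, pool.getPoolSize()};
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            pool.shutdownNow();
        }
    }

    public static void main(String[] args) {
        int[] sizes = run(3, 10, 200);
        System.out.println("peak=" + sizes[0] + ", afterIdle=" + sizes[1]);
    }
}
```

With core 3 and max 10 the pool should peak at 10 threads and fall back to 3 once it has been idle, which is the behaviour the Javadoc describes when no tasks arrive at all.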

ReentrantLock

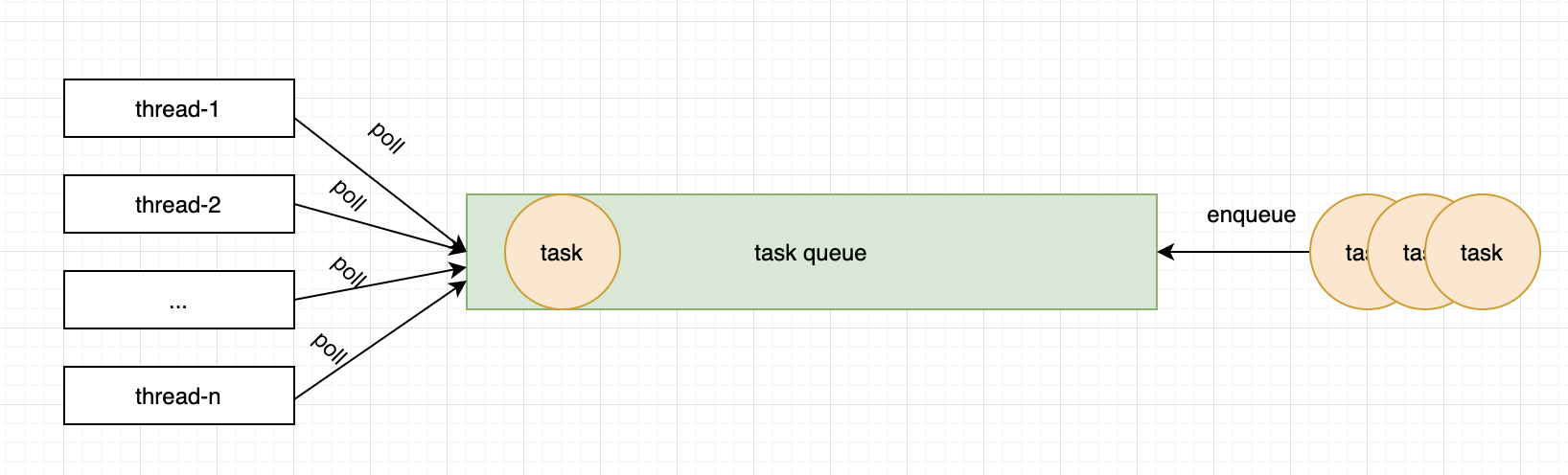

Could it be that the task queue's poll hands tasks out evenly across all waiting threads?

When you have eliminated the impossible, whatever remains, however improbable, must be the truth.

Tomcat uses TaskQueue as its work queue, which extends LinkedBlockingQueue. The core poll logic, however, is still that of LinkedBlockingQueue:

    // org.apache.tomcat.util.threads.TaskQueue#poll

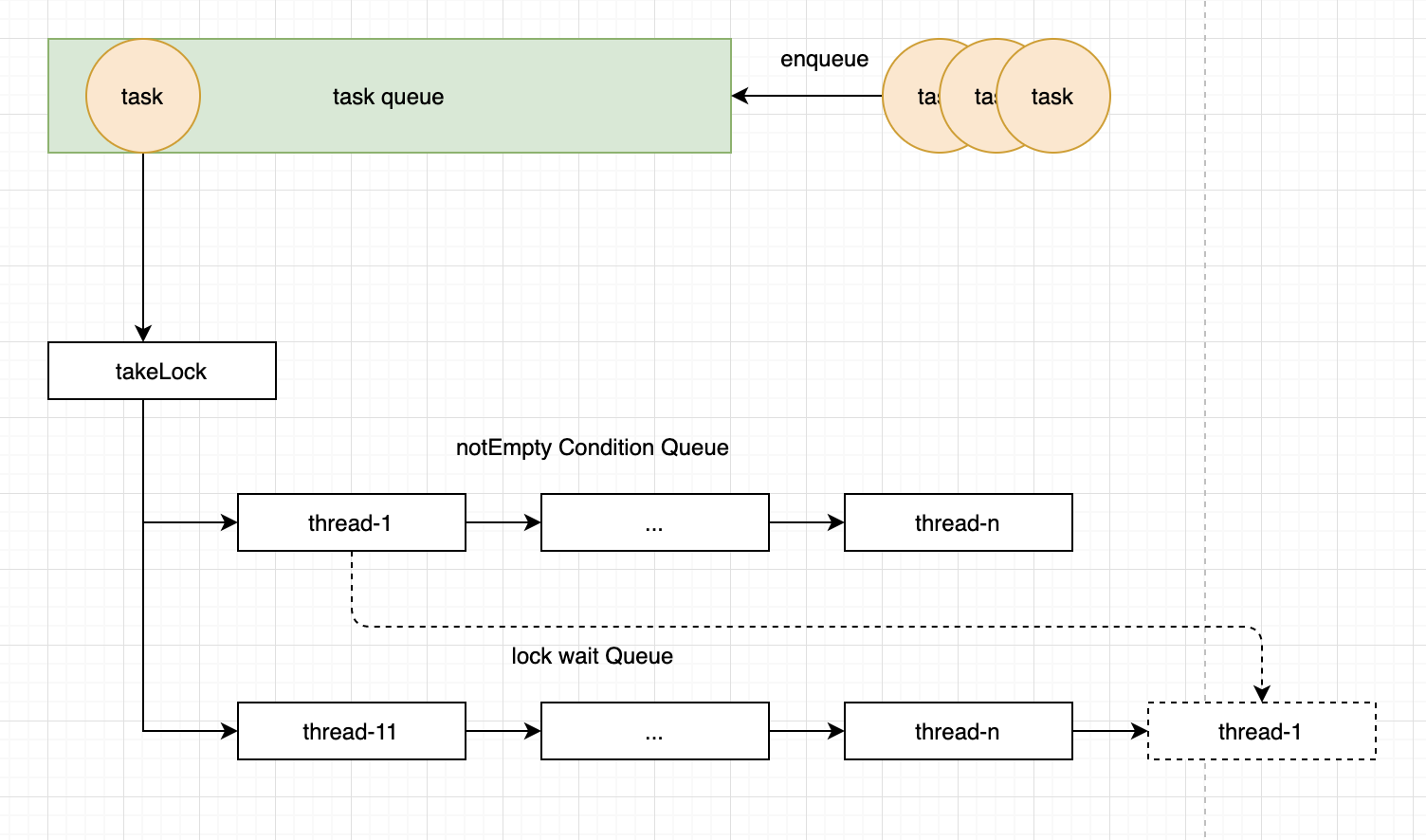

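The key lies in takeLock and notEmpty: takeLock is a ReentrantLock, which is non-fair by default, and notEmpty is the condition queue of takeLock.

    // java.util.concurrent.LinkedBlockingQueue

ReentrantLock is non-fair by default and is built on AQS. Fair and non-fair modes differ only in the first acquisition attempt: a non-fair lock tries to barge once, and if that fails the thread queues up like any other, after which waiters are released in order.

    // java.util.concurrent.locks.ReentrantLock.Sync#nonfairTryAcquire

At low QPS there is little lock contention. Most threads acquire the lock but find no task, so they end up waiting in the condition queue.

    // java.util.concurrent.locks.AbstractQueuedSynchronizer.ConditionObject#signal

Waiters in the condition queue are signalled in queue order (longest-waiting thread first): signal moves the wait node from the condition queue to the lock's wait queue, where it competes for the lock again.

At that point there are few competitors, essentially the busy threads plus the thread just woken by the signal, so the woken thread will most likely get the task.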
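The FIFO hand-off can be observed directly on a `LinkedBlockingQueue`, without Tomcat. The following is a minimal sketch under simplifying assumptions: consumers are started one at a time so that their order in the condition queue is known, and tasks are fed strictly one by one so that no thread can barge. The class name `RoundRobinPollDemo` and the `worker-N` thread names are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.LinkedBlockingQueue;

public class RoundRobinPollDemo {

    /** Feeds tasks one at a time to idle consumers and returns who served each one. */
    public static List<String> run(int workers, int tasks) {
        BlockingQueue<Integer> work = new LinkedBlockingQueue<>();
        // Lock-free result queue, so a worker only ever parks inside work.take().
        Queue<String> served = new ConcurrentLinkedQueue<>();
        Map<String, Thread> threads = new LinkedHashMap<>();
        List<String> order = new ArrayList<>();
        try {
            for (int i = 0; i < workers; i++) {
                Thread t = new Thread(() -> {
                    try {
                        while (true) {
                            work.take(); // waits on the queue's notEmpty condition while empty
                            served.add(Thread.currentThread().getName());
                        }
                    } catch (InterruptedException e) {
                        // the driver interrupts the workers when the run is over
                    }
                }, "worker-" + i);
                t.setDaemon(true);
                threads.put(t.getName(), t);
                t.start();
                awaitParked(t); // worker-i is queued before worker-(i+1) starts
            }
            for (int i = 0; i < tasks; i++) {
                work.put(i);
                String name;
                while ((name = served.poll()) == null) {
                    Thread.sleep(1);
                }
                order.add(name);
                awaitParked(threads.get(name)); // let it rejoin the tail of the wait queue
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            threads.values().forEach(Thread::interrupt);
        }
        return order;
    }

    /** Spins until the thread is parked (state WAITING). */
    private static void awaitParked(Thread t) throws InterruptedException {
        while (t.getState() != Thread.State.WAITING) {
            Thread.sleep(1);
        }
    }

    public static void main(String[] args) {
        System.out.println(run(3, 6));
    }
}
```

With 3 workers and 6 tasks the run should print `[worker-0, worker-1, worker-2, worker-0, worker-1, worker-2]`: each task goes to the thread that has been waiting longest, and a thread that has just finished goes to the back of the line.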

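Experiment

The root cause: when tasks are scarce, all workers queue up on the notEmpty condition, and when a task arrives they are woken in queue order. If the QPS is high enough relative to keepAliveTime, every worker gets at least one task within keepAliveTime, so none of them is reclaimed.

Queueing in order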

Set maxThreads to 10 and have the test code print the name of the thread that handles each request.

Run curl serially 10 times:

    for i in `seq 1 10`; do curl "http://localhost:8087/web_war_exploded/hello" && echo -e '\n'; done;

Output:

    ➜ conf for i in `seq 1 10`; do curl "http://localhost:8087/web_war_exploded/hello" && echo -e '\n'; done;

The requests are indeed served in a round-robin-like fashion.

Threads shrink back

Tomcat's default thread pool has a keepAliveTime of 60s. Set maxThreads to 10 and minSpareThreads to 3.

After startup, the mbean output:

    [arthas@98537]$ mbean Catalina:type=ThreadPool,name=*

This matches the configuration. Next, send a burst of requests to create 10 workers (maxThreads):

    for i in `seq 1 10`; do curl -s "http://localhost:8087/web_war_exploded/hello" & done;

The mbean output now:

    [arthas@98537]$ mbean Catalina:type=ThreadPool,name=*

currentThreadCount is now 10. Wait 1 minute and check again:

    [arthas@98537]$ mbean Catalina:type=ThreadPool,name=*

currentThreadCount has fallen back to 3 (minSpareThreads).

Threads do not shrink

To keep threads from being reclaimed, each thread only needs one task per minute. With maxThreads at 10, that means 10 requests per minute (qpm) is enough.

First, spike the load:

    for i in `seq 1 10`; do curl -s "http://localhost:8087/web_war_exploded/hello" & done;

Then hold 10 qpm:

    for i in `seq 1 100000`; do curl -s "http://localhost:8087/web_war_exploded/hello" && echo "-n" && sleep 5; done;

The handler sleeps for 1s and the curl loop sleeps for 5s, so each request takes 6s, which is 10 requests per minute. The mbean output now:

    [arthas@98537]$ mbean Catalina:type=ThreadPool,name=*

It stays at 10, matching production: the non-shrinking behaviour is reproduced.

Now change the sleep time to lower the qpm and see whether the pool partially shrinks:

    for i in `seq 1 100000`; do curl -s "http://localhost:8087/web_war_exploded/hello" && echo "-n" && sleep 7; done;

It gradually falls back to 8 threads:

    [arthas@98537]$ mbean Catalina:type=ThreadPool,name=* | grep -i currentThreadCount
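The non-shrinking behaviour can also be sketched with a plain JDK `ThreadPoolExecutor` over a `LinkedBlockingQueue`. This is an approximation, not Tomcat's setup: a JDK pool with an unbounded queue never grows past its core size on its own, so the sketch prestarts the threads and then lowers the core size to 1 as a stand-in for the earlier traffic peak. The class name `NoShrinkDemo`, the 4x safety margin and the parameter values are assumptions chosen for illustration.

```java
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class NoShrinkDemo {

    /** Returns {pool size under a steady trickle, pool size after the trickle stops}. */
    public static int[] run(int threads, long keepAliveMillis) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                threads, threads, keepAliveMillis, TimeUnit.MILLISECONDS,
                new LinkedBlockingQueue<>());
        try {
            pool.prestartAllCoreThreads(); // stand-in for the earlier traffic peak
            pool.setCorePoolSize(1);       // from now on, threads - 1 workers are excess

            // One trivial task every keepAlive / (threads * 4): four times faster
            // than the critical rate of threads / keepAliveTime.
            long interval = Math.max(1, keepAliveMillis / (threads * 4L));
            long end = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(keepAliveMillis * 3);
            while (System.nanoTime() < end) {
                pool.execute(() -> { });
                Thread.sleep(interval);
            }
            int underLoad = pool.getPoolSize(); // measured after 3x keepAliveTime of light load

            Thread.sleep(keepAliveMillis * 3);  // no tasks at all: excess workers time out
            return new int[] {underLoad, pool.getPoolSize()};
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            pool.shutdownNow();
        }
    }

    public static void main(String[] args) {
        int[] sizes = run(4, 500);
        System.out.println("underLoad=" + sizes[0] + ", afterIdle=" + sizes[1]);
    }
}
```

Although the tasks are empty and the workers are idle almost all the time, the pool is expected to stay at 4 threads while the trickle lasts and to drop to 1 only after it stops. The result is timing-dependent, so a heavily loaded machine could in principle lose a thread during the first phase.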

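Solution

The critical QPS is maxThreads / keepAliveTime; once request processing time is taken into account, the real value may be slightly higher. Above the critical value threads do not shrink; below it they gradually do.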
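Applied to the experiment above, the rule works out as follows. This is a small arithmetic sketch that uses only the maxThreads / keepAliveTime rule and the numbers from the experiment (10 threads, 60s, a 1s handler, curl pauses of 5s and 7s); the class and method names are hypothetical, and it does not try to predict how many threads remain after shrinking.

```java
public class CriticalRate {

    /** Critical request rate per minute: maxThreads / keepAliveTime. */
    public static double criticalPerMinute(int maxThreads, double keepAliveSeconds) {
        return maxThreads * 60.0 / keepAliveSeconds;
    }

    /** Rate of a serial client that sends one request every (handling + pause) seconds. */
    public static double serialClientPerMinute(double handlingSeconds, double pauseSeconds) {
        return 60.0 / (handlingSeconds + pauseSeconds);
    }

    public static void main(String[] args) {
        double critical = criticalPerMinute(10, 60); // 10 requests per minute
        for (double pause : new double[] {5, 7}) {
            double rate = serialClientPerMinute(1, pause);
            System.out.printf("sleep %.0fs: %.1f req/min vs critical %.1f, shrinks: %b%n",
                    pause, rate, critical, rate < critical);
        }
    }
}
```

A 5s pause gives 10 requests per minute, exactly at the critical value, which matches the run where the pool stayed at 10. A 7s pause gives 7.5 requests per minute, below the critical value, which matches the run where the pool gradually shrank.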

- Adjust keepAliveTime

With Tomcat's default thread pool, keepAliveTime cannot be adjusted. A custom thread pool can be used instead, and its maxIdleTime (the equivalent of keepAliveTime) can be set.

    <!--The connectors can use a shared executor, you can define one or more named thread pools-->

After changing it to 10s and holding 10 qpm, the pool shrinks quickly:

    [arthas@54257]$ mbean Catalina:type=ThreadPool,name=* | grep -i currentThreadCount

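Summary

- Tomcat's thread pool uses TaskQueue to dispatch requests; its poll logic is the same as that of its parent class LinkedBlockingQueue

- Inside LinkedBlockingQueue, when there is no task, polling threads wait in order in notEmpty, the Condition queue of a ReentrantLock

- When a task arrives, signal wakes waiters in queue order, first in, first out

- When qps > maxThreads / keepAliveTime, every thread gets a chance at a task within keepAliveTime and so avoids being reclaimed

- Tomcat's default thread pool does not support setting keepAliveTime; a custom thread pool solves this

- JDK thread pools have the same issue, so pay attention to the keepAliveTime setting

- Constantly rotating requests across threads causes frequent context switches, which probably also affects performance

- Production services usually have health-check probes, which are another reason threads do not shrink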